If you’ve been around AI long enough, you’ve probably heard these terms thrown around like they’re interchangeable.

Training.

Fine-tuning.

RAG.

Fine-tuning.

RAG.

They’re not the same. Not even close.

And if you’re running a business and trying to decide where to invest your time and money, misunderstanding this spectrum can cost you a lot of both.

So let’s slow this down and walk through it the way we did in our discussion. No hype. No buzzwords. Just how this actually works.

And yes, I’m going to borrow one idea from computer architecture, but I’ll keep it human.

Start at the Core: Training a Model From Scratch

Let’s begin at the extreme end of the spectrum.

Training a model from scratch means you’re not just adjusting behavior. You’re building the model’s brain from zero. You initialize all the weights randomly and teach it everything through data.

The architecture itself, like the transformer layers, attention heads, hidden dimensions, that part is not the hard thing anymore. Frameworks like PyTorch and TensorFlow make defining architectures almost mechanical.

The hard part is the training.

Training requires:

- Massive datasets

- Massive compute

- Careful tuning of learning rate, batch size, optimizer, and more

- Weeks or months of GPU time if the model is large

Could you take something like an open model architecture and reinitialize all the weights and train it yourself?

Yes.

Should a normal business do that?

Almost never.

This is research lab territory. Frontier labs. Universities. Heavily funded teams.

For small and mid-sized businesses, this is not where the ROI lives.

The Middle Ground: Fine-Tuning (Also Called Retraining)

Now this is where things get interesting.

Fine-tuning means you start with a model that already knows a lot about the world. It has general reasoning ability. Language understanding. Broad knowledge.

Then you gently adjust it using your own dataset.

You don’t want to erase its brain. You want to nudge it.

This is where parameters like learning rate and batch size matter.

The learning rate is literally how aggressive the updates are. Set it too high and the model forgets what it already knew. Set it too low and it barely adapts. It’s usually a very small number, like 0.00001 or 0.0001.

Batch size controls how many input-output examples are processed before the weights get updated. The model doesn’t change its weights after every single example. It processes a batch, calculates the average gradient, and then updates once.

And if you push those updates too aggressively, you risk triggering catastrophic forgetting, where new knowledge quietly overwrites what the model previously understood.

So in fine-tuning, you’re basically saying:

“Keep most of what you know. But adjust yourself slightly in this direction.”

And yes, this still requires serious hardware. Not frontier-lab level, but real GPUs, real memory, real engineering.

Now here’s the real question.

When is this worth it?

If your business logic is mostly about retrieving facts and applying structured rules, you probably do not need fine-tuning.

If your domain requires deep reasoning patterns that are proprietary, nuanced, or very hard to express through rules, then fine-tuning starts to make sense.

Biotech research? Maybe.

Highly specialized financial modeling? Possibly.

Massive legal reasoning datasets with proprietary structure? Could be.

Highly specialized financial modeling? Possibly.

Massive legal reasoning datasets with proprietary structure? Could be.

Small plumbing company chatbot?

No.

The Other End: RAG (Retrieval-Augmented Generation)

This is where most businesses should start.

RAG does not change the model’s weights.

Let me say that again because this is where confusion happens.

RAG does not retrain the model.

Instead, it feeds the model additional data at runtime.

Think of it like this.

The model has its internal knowledge. That is fixed. That came from training or fine-tuning.

But when a question is asked, we can fetch relevant documents from:

- A vector database

- Internal company documents

- A knowledge base

- A live search API

Those documents are inserted into the prompt. The model then reasons using its existing brain plus this fresh context.

Nothing inside the model changes. The weights stay untouched.

From a technical mindset, you can think of this as runtime augmentation versus compile-time learning.

Fine-tuning is changing the compiled program.

RAG is injecting additional memory while the program runs.

And this is powerful because it gives you:

- Fresh data

- Company-specific knowledge

- Zero retraining cost

- Faster iteration

Most businesses that think they need fine-tuning actually need good retrieval and better prompt design.

That architectural separation becomes even more critical in production environments where grounded, enterprise-grade RAG systems are required to eliminate hallucinations and ensure traceability.

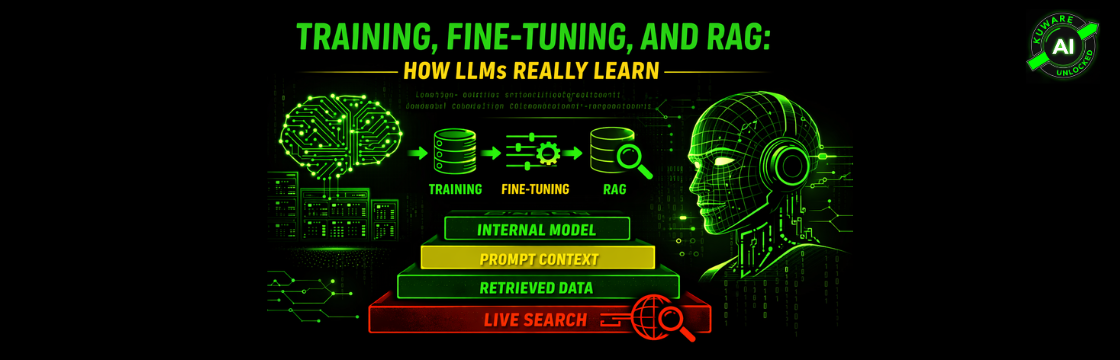

Now Let’s Talk About “Cache Hierarchy” (But in Plain English)

Here’s the mental model I like.

Imagine layers of knowledge, from closest to the model outward.

Layer 1: Internal Weights

This is what the model learned during training.

It’s always available. Fast. Instant.

But it’s frozen unless you retrain.

It’s always available. Fast. Instant.

But it’s frozen unless you retrain.

Layer 2: Prompt Context

Whatever you put directly in the prompt.

Instructions. Examples. Policies.

This is short-term working memory.

Instructions. Examples. Policies.

This is short-term working memory.

Layer 3: Retrieval (RAG)

Documents pulled from your database.

Relevant chunks fetched and inserted.

This expands what the model can see.

Relevant chunks fetched and inserted.

This expands what the model can see.

Layer 4: External Systems

Live search. APIs. Databases.

Slower. More dynamic. Potentially unlimited.

Slower. More dynamic. Potentially unlimited.

Each layer adds more information, but usually increases latency and complexity.

The smartest systems don’t just retrain everything. They design this hierarchy intentionally.

You ask:

- What should live permanently inside the model?

- What should be passed in the prompt?

- What should be retrieved?

- What should be fetched live?

That’s architecture thinking at the system level.

And that’s where business strategy actually lives.

So Where Should You Invest?

If you’re a $1M to $50M business, the real leverage is usually not in building a model from scratch.

It’s in:

- Smart AI assessment

- Identifying repetitive knowledge workflows

- Designing retrieval pipelines

- Layering prompt logic properly

- Adding governance and monitoring

This aligns directly with how we approach AI consulting in the business world .

The goal is not to say “we trained our own model.”

The goal is to say:

“Did this reduce cost?

Did this increase revenue?

Did this improve customer experience?”

Did this increase revenue?

Did this improve customer experience?”

If the answer is yes, then the architecture choice was correct.

One Final Thought

There’s a temptation in AI right now to go straight to the most advanced thing.

Let’s train a model.

Let’s fine-tune everything.

Let’s build our own LLM.

Let’s fine-tune everything.

Let’s build our own LLM.

Slow down.

Most problems are solved one layer up the hierarchy, not at the deepest level.

Start with retrieval.

Understand prompt engineering deeply.

Only fine-tune when reasoning itself needs reshaping.

Only train from scratch if you are doing research.

Understand prompt engineering deeply.

Only fine-tune when reasoning itself needs reshaping.

Only train from scratch if you are doing research.

And if you want to go deeper technically, I always recommend getting hands-on with small toy models first. Build one. Train one. Watch how learning rate affects behavior. It changes how you think about all of this.

This blog follows the writing principles we use for authentic, human AI education , because if you’re going to work with AI seriously, you need clarity, not buzzwords.

At Kuware.com, we’re not interested in hype.

We’re interested in leverage.

Unlock your future with AI or risk being locked out.

Make the choice now.