What is A/B testing?

Do you remember when you were supposed to go on that date you had been looking forward to and needed clarification about which outfit would suit you the best? So you tried both of them and asked your friends to tell you which one looked the best? A/B testing is pretty much like that, too.

A/B testing, also sometimes known as AB split testing, is a marketing study in which you divide your audience and test multiple campaign variations to see which performs best. This method can help you compare the effectiveness of two different website versions, emails, ad copies, popups, landing pages, and more.

Whether you are a seasoned marketer refining your strategies or a product manager curious about user preferences, you can split test assets in everything from outreach emails to content marketing to social media marketing. A/B testing is your gateway to optimizing your landing pages, enhancing your marketing strategy, and amplifying your market reach with targeted pay-per-click campaigns and conversion rate optimization techniques. An A/B split test can not only clarify which call to action resonates but also enhance your lead generation efforts, from paid search ads to email deployments.

A/B testing is essential in a digital marketing world where data-driven marketing supports business decisions. Running an A/B test that compares a variation to the existing landing page or email campaign allows you to ask specific questions about changes to your website or app and then collect statistics on the impact of that change.

A/B testing adds value to almost everything it’s applied to because different audiences behave differently, and something that works for one business might not work for another. So, with A/B testing, you can quickly and efficiently identify the most effective factors in your lead generation and conversion rate optimization.

How Does A/B Testing Work?

Well, it’s all in the magic of testing and tweaking. Enhancing your marketing strategy isn’t just about throwing spaghetti at the wall to see what sticks—it’s about being the chef who knows exactly why one type sticks better than another when devising marketing campaigns.

To conduct an A/B test, you create two versions of the same piece of marketing content that differ in a single variable. Then you show these two versions to two audiences of similar size and similar characteristics (demographics and so on) and see which one performs better over a set time period, using tools like HubSpot’s A/B Testing Kit or Google Optimize.

How to Do A/B Testing

The first step in planning an A/B test is identifying what you want to test. Are you doing an on-site or off-site test? To perform an A/B test effectively, you should be able to clearly differentiate between version A and version B. You must first decide which item to test and then create version A, which may be something that has done well in the past, and version B with minor changes to one of the elements.

The two versions are then shown to two randomly split groups drawn from the same audience, so that both groups are comparable in demographics, interests, or roles. You can then begin tracking their performance to see if version A outperforms version B or vice versa.
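One common way to split an audience is to hash a stable visitor identifier, so that each person always sees the same version and the two groups end up roughly equal in size. The snippet below is a minimal sketch of that idea, not the method used by any particular testing tool. The function name `assign_variant`, the experiment name `homepage-cta`, and the `user-N` identifiers are all hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a visitor to one variant (hypothetical helper)."""
    # Salt the hash with the experiment name so separate tests split independently.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer and bucket it evenly across the variants.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Made-up visitor IDs, only to show that the split comes out close to even.
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user-{i}", "homepage-cta")] += 1
    print(counts)
```

Because the assignment depends only on the identifier and the experiment name, a returning visitor keeps seeing the same version without you having to store the assignment anywhere.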

AB-Testing: Before the Test

Here are a few things that you should never miss while running an A/B test:

- Know your objectives: Before doing an A/B test, you should always set goals. Do you want to boost traffic? Or sales? You should be able to lay out your objectives ahead of time to know which elements to test and what adjustments to make accordingly.

- Select the page for testing: Always begin with the most important page. It could be the homepage of your website or a landing page. Whatever you choose, make sure it includes all of the information a customer would need.

- Choose an element you want to test: Use A/B testing on a single element at a time, such as a call-to-action button, a headline, or a picture. This keeps the test simple and makes it easy to attribute any difference to that one change. However, make sure that the element you wish to test is related to the metric you want to evaluate. For example, if you’re trying to generate sales, focus on your headline or CTA.

- Have a “Control” and a “Challenger”: By now, you have decided on your objectives, the element you want to test, and the page you want to test it on. It’s now time to set up a control and a challenger version. The control is the existing version of this element, such as the header image or the subject line you have been using until now. If you are beginning with a new element, the control is the most obvious solution you design. The challenger, on the other hand, is the altered website, landing page, or email that you’ll test against your control.

- Choose an A/B testing tool: You can immediately track the progress and performance of your A/B test using the most powerful marketing tools. Invest in one of the best testing tools on the market to simplify your tasks and speed up the entire process of your marketing campaigns.

- Allocate the correct sample sizes: In A/B testing, it is crucial to decide up front how many people each variation needs to reach and to split your audience accordingly. If a group is too small or the split is uneven, the results can be skewed and you will not be able to draw an objective conclusion.

- Schedule the Test: Another factor that determines whether your AB test can be a surprising revelation or an exercise in futility is the time frames you schedule the variations to run in. If you decide to run the two variations in different time windows, make sure that the variable you are testing is time itself. In any other scenario, select a timeframe when you can expect similar traffic to both portions of your split test.

- Run the Test: Once you are done designing your variations, it’s time to run the test by showing the two versions to two distinct groups of people. Visitors to your website or app are randomly assigned to one of the two variants. Their engagement with each variant is measured, counted, and compared to evaluate how well each option performs.

- Analyze the data and statistics: Once your A/B test is up and running, you can monitor its progress and determine which of the two versions ends up outperforming the other, or whether the two are producing the same results. Draw conclusions only after the test has run for its planned duration, based on which variation wins on the metric you chose in your objectives.
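The sample-size step in the checklist above can be made concrete with a standard textbook approximation for comparing two conversion rates. This is a rough sketch rather than a replacement for the calculator in your testing tool. The function name `sample_size_per_variant` is hypothetical, and the 10% and 12% rates, the 5% significance level, and the 80% power are illustrative assumptions, not figures from this article.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect the given change
    in conversion rate with a two-sided test (hypothetical helper)."""
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ to define an effect size")

    # Critical values of the standard normal for the chosen alpha and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)

    pooled = (baseline_rate + expected_rate) / 2
    spread_null = math.sqrt(2 * pooled * (1 - pooled))
    spread_alt = math.sqrt(
        baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    )
    numerator = (z_alpha * spread_null + z_beta * spread_alt) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


if __name__ == "__main__":
    # Illustrative assumption: control converts at 10%, and we hope for 12%.
    print(sample_size_per_variant(0.10, 0.12))
```

The smaller the improvement you want to detect, the more visitors each variant needs, which is one reason small tweaks can take a long time to test on low-traffic pages.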

AB-Testing: After the Test

- Review & compare your target metric: The first step after you have finally completed your AB test is to check your key metric, usually conversion rate, but it can be a variety of different indicators depending on your objectives. You will need to check this for both your test groups to figure out which one fared better.

- Group your audiences for further examination: To gain deeper insights, analyze your results by audience segment, regardless of significance, to understand how each segment responded to your variations. Segmenting can be done based on:

  - Visitor type: Great for finding out what works best for new versus returning visitors.

  - Device type: To understand what works better on mobile devices versus laptops and PCs.

  - Traffic source: Compare performance based on where the audience comes from.
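The review and segmentation steps above can be sketched with a two-proportion z-test, which is one common way to check whether a difference in conversion rate is likely to be more than noise. This is a minimal illustration under stated assumptions: the function name `two_proportion_z_test` is hypothetical, and every count in `segments` is made up for the example rather than taken from a real campaign.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (rate_a, rate_b, z, two-sided p-value) for control A vs challenger B."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


if __name__ == "__main__":
    # Hypothetical data: (conversions, visitors) for control, then challenger.
    segments = {
        "new visitors": ((120, 2400), (156, 2400)),
        "returning visitors": ((90, 1500), (93, 1500)),
    }

    # Overall result: add up the segments for each version.
    total_a = [sum(seg[0][i] for seg in segments.values()) for i in (0, 1)]
    total_b = [sum(seg[1][i] for seg in segments.values()) for i in (0, 1)]
    rows = {"overall": (tuple(total_a), tuple(total_b)), **segments}

    for name, ((conv_a, n_a), (conv_b, n_b)) in rows.items():
        rate_a, rate_b, z, p = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
        print(f"{name}: A={rate_a:.2%} B={rate_b:.2%} z={z:.2f} p={p:.3f}")
```

Each segment contains fewer visitors than the full test, so segment-level results are noisier than the overall result and are best treated as leads for follow-up tests.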

A/B Testing Examples

Here are a couple of personal examples that I think will help you understand the practical aspects of AB testing with much more clarity.

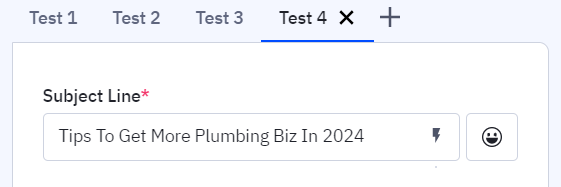

Subject Lines

We have been advertising experts in our niche for over a decade. With such an extended history of marketing aptitude to back us up, I decided to roll out a weekly newsletter on the best practices in plumbing marketing. Six months in, we were facing a dilemma. While the initial response had been great with double digit open rates (over 20%), we kept registering a steady decline of 1-2% every month, with more and more people unsubscribing from every newsletter.

I sat down with our head of marketing, Justin, to put a lid on this. Half an hour later, we had decided to A/B test multiple subject lines for the newsletter. With our usual subject line as the control group and three challengers, we undertook a complete split test.

With the control group outperforming all three challengers, we had a better understanding of what works with our target audience of plumbing business owners.

3 months and a lot of subject lines later, we still test at least 1 newsletter every month. Our open rates have never been higher (over 30%!) and we continue to test and optimize everything, from content to covers, in our newsletters.

FB Ad Creatives

With our firm organizing the first large-scale plumbing webinar ever, we decided to reel in as much audience for the momentous event as possible. For this, we decided to split test the ads with multiple creatives.

Here, in our control scenario, we kept the creative with a human element, while in the challenger one, we let GPT create a plumber for us.

While both copies performed well, the control case, as per our hypothesis, did manage to outdo the second copy by over 2% in CTR.

Tools for A/B testing

Here is a list of top 10 tools you would want to consider to get your A/B Testing done:

- Google Optimize (discontinued by Google in September 2023)

- HubSpot’s A/B Testing Kit

- Freshmarketer

- VWO

- Optimizely

- Omniconvert

- Crazy Egg

- AB Tasty

- Convert

- Adobe Target

Have something more to add? Or did we miss anything? Let us know in the comments below.